My solution was to open the BSON files manually (with Python), find the oversized documents and remove part of their content, then write the BSON objects to a new BSON file and load that modified file, which Mongo then stored without errors.

This doesn't satisfy my wish to be able to load the dumped database into the system without changing it!
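To find the oversized documents, you don't have to decode anything. Each document in a `.bson` dump begins with its total length, prefix included, stored as a little-endian int32. That means the file can be walked document by document. The sketch below assumes the failure is MongoDB's documented 16 MB per-document limit. The names `iter_document_sizes`, `find_oversized` and `MAX_BSON_SIZE` are hypothetical and do not come from any library.

```python
import struct
from typing import BinaryIO, Iterator, List, Tuple

# Assumption: the limit being hit is MongoDB's 16 MiB maximum document size.
MAX_BSON_SIZE = 16 * 1024 * 1024


def iter_document_sizes(f: BinaryIO) -> Iterator[Tuple[int, int, int]]:
    """Yield (index, byte offset, size) for each document in a .bson dump.

    A BSON document starts with its total length (prefix included) as a
    little-endian int32, so the file can be walked without decoding.
    """
    index = offset = 0
    while True:
        header = f.read(4)
        if not header:
            return  # clean end of file
        if len(header) < 4:
            raise ValueError(f"truncated length prefix at offset {offset}")
        (size,) = struct.unpack("<i", header)
        if size < 5:  # the smallest valid document, {}, is 5 bytes
            raise ValueError(f"invalid document size {size} at offset {offset}")
        f.seek(size - 4, 1)  # skip the body, relative to the current position
        yield index, offset, size
        index += 1
        offset += size


def find_oversized(path: str, limit: int = MAX_BSON_SIZE) -> List[Tuple[int, int, int]]:
    """Return (index, offset, size) for every document larger than `limit`."""
    with open(path, "rb") as f:
        return [entry for entry in iter_document_sizes(f) if entry[2] > limit]
```

For example, `find_oversized("/path/to/file.bson")` lists every document above 16 MiB. The index matches that document's position in the list returned by `bson.decode_all`. Because the scan only reads the length prefixes and seeks past each body, it stays cheap even on a large dump.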

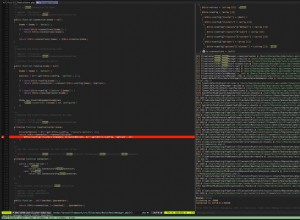

Python 3:

```python
# Requires pymongo, which provides the `bson` module used here
# (not the unrelated "bson" package on PyPI).
import bson
from pprint import pprint


def get_bson_data(filename):
    """Decode every document in a .bson dump file into a list of dicts."""
    with open(filename, "rb") as f:
        return bson.decode_all(f.read())


def report_problematics_documents(data):
    """Print each oversized document, pausing after each one."""
    problematics = []
    for item in data:
        if is_too_big(item):
            pprint(item)
            input("give me some more...")  # wait for Enter before continuing
            problematics.append(item)
    print(f"data len: {len(data)}")
    print(f"problematics: {problematics}")
    print(f"problematics len: {len(problematics)}")


def shrink_data(data):
    """Replace every oversized document in place with a shrunk version."""
    for i, item in enumerate(data):
        if is_too_big(item):
            data[i] = shrink_item(item)  # or delete it...
            print(f"item shrunk: {i}")


def write_bson_file(data, filename):
    """Write the documents back out as a concatenated .bson file."""
    with open(filename, "wb") as f:
        for event in data:
            f.write(bson.encode(event))


def is_too_big(item):
    # You need to implement this one...
    raise NotImplementedError


def shrink_item(item):
    # You need to implement this one...
    raise NotImplementedError


def main():
    bson_file_name = "/path/to/file.bson"

    data = get_bson_data(bson_file_name)
    report_problematics_documents(data)

    shrink_data(data)
    report_problematics_documents(data)

    new_filename = bson_file_name + ".new"
    write_bson_file(data, new_filename)

    # Reload the new file to check that no oversized documents remain.
    print("Load new data")
    data = get_bson_data(new_filename)
    report_problematics_documents(data)


if __name__ == "__main__":
    main()
```
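Here is one possible way to fill in the two stubs. It assumes the goal is to bring each document under MongoDB's 16 MB limit by dropping its largest top-level fields until it fits. The policy is my own assumption and is only one option: `_id` is always kept, and removed field names are recorded under a hypothetical `_dropped_fields` key. Truncating a long array or string would work just as well. The size function can be passed in. It defaults to measuring the encoding with pymongo's `bson.encode`, and that import is deferred so the logic can be exercised without pymongo.

```python
from typing import Any, Callable, Dict, Optional

# Assumption: the target is MongoDB's 16 MiB maximum document size.
MAX_BSON_SIZE = 16 * 1024 * 1024

Document = Dict[str, Any]
SizeFn = Callable[[Document], int]


def bson_size(doc: Document) -> int:
    """Encoded size of `doc` in bytes, using pymongo's bson module."""
    import bson  # imported lazily so callers can supply their own size_of

    return len(bson.encode(doc))


def is_too_big(item: Document, size_of: Optional[SizeFn] = None,
               limit: int = MAX_BSON_SIZE) -> bool:
    """True if the encoded document exceeds `limit` bytes."""
    return (size_of or bson_size)(item) > limit


def shrink_item(item: Document, size_of: Optional[SizeFn] = None,
                limit: int = MAX_BSON_SIZE) -> Document:
    """Return a copy of `item` with its largest top-level fields dropped
    until it fits within `limit` bytes.

    Hypothetical policy: `_id` is never dropped, and the names of removed
    fields are listed under "_dropped_fields" so the loss stays visible.
    """
    size_of = size_of or bson_size
    shrunk = dict(item)  # shallow copy; the original document is untouched
    dropped = []
    while size_of(shrunk) > limit:
        candidates = [k for k in shrunk if k not in ("_id", "_dropped_fields")]
        if not candidates:
            raise ValueError("document is still too big after dropping every other field")
        # Drop the field whose value alone encodes to the most bytes.
        largest = max(candidates, key=lambda k: size_of({k: shrunk[k]}))
        del shrunk[largest]
        dropped.append(largest)
        shrunk["_dropped_fields"] = dropped
    return shrunk
```

If you paste these in place of the two stubs, `shrink_data` and `report_problematics_documents` keep working unchanged, because both functions still accept a single document and fall back to `bson_size`.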